This is known as "depth of field."

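In photography, the more light a lens lets in (that is, the wider its aperture and the lower its ƒ-number), the shallower the depth of field: less of the scene in front of and behind the exact focus point will appear sharp.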
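For subjects well inside the hyperfocal distance, a common textbook approximation makes this trade-off explicit. Here N is the ƒ-number, c the acceptable circle of confusion, s the focus distance, and f the focal length (symbols introduced only for this sketch):

$$
\text{DoF} \approx \frac{2 N c s^2}{f^2}
$$

Total depth of field grows roughly in proportion to N, so halving the ƒ-number (a much wider aperture) roughly halves the zone of acceptable sharpness, all else being equal.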

As Apple has worked to improve Night mode, they've also moved to lenses with wider apertures that gather more light, with the downside that depth of field is slightly reduced.

So when you say that areas far from the focus point of the photo are sharper on your iPhone XS, you are actually somewhat correct:

iPhone 13 Pro Max Wide: ƒ/1.5 aperture

iPhone XS Wide: ƒ/1.8 aperture
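As a rough worked comparison: light reaching the sensor per unit area scales with 1/N², so, ignoring differences in sensor size, the ƒ/1.5 lens gathers about 44% more light than the ƒ/1.8 lens, roughly half a stop:

$$
\left(\frac{1.8}{1.5}\right)^2 = 1.2^2 = 1.44, \qquad \log_2 1.44 \approx 0.53 \text{ stops}
$$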

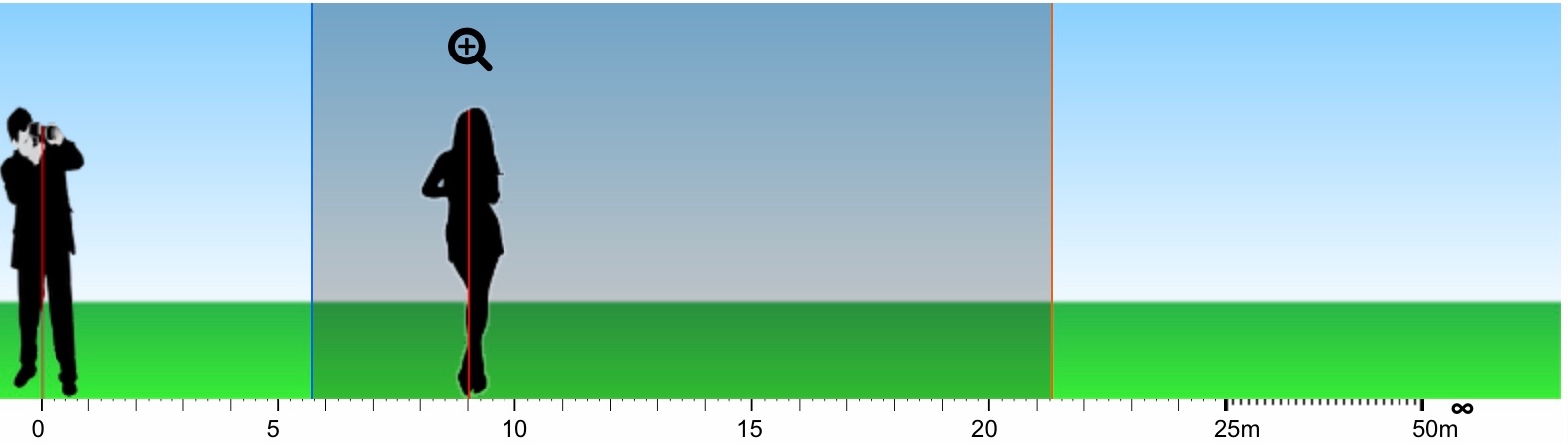

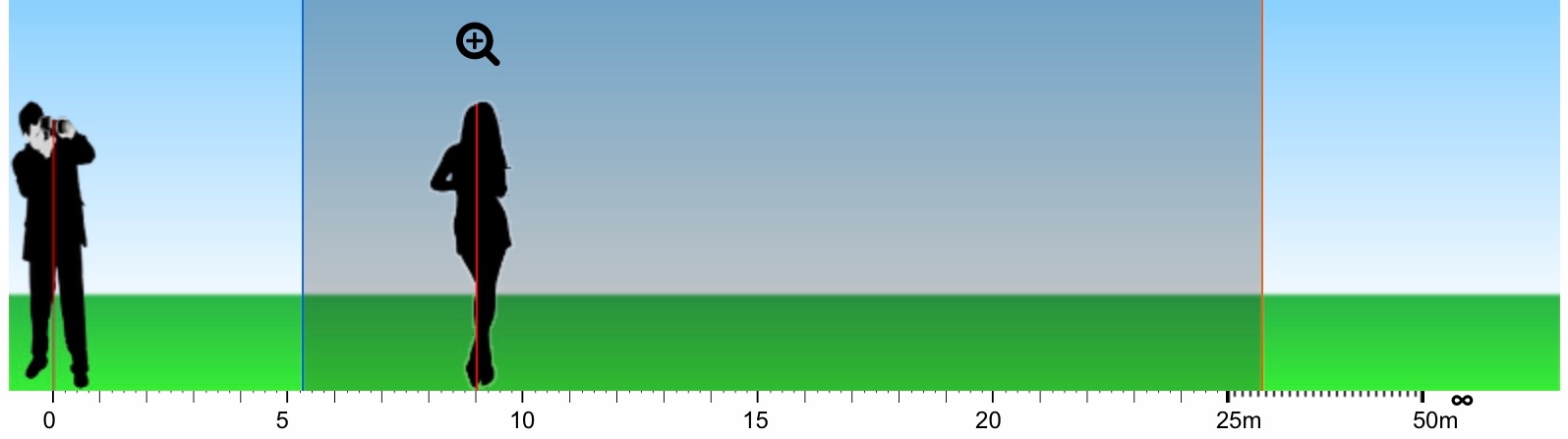

This isn't completely accurate, but it's a rough simulation.

Say you focused on a model 9 meters from the camera; this shows approximately which distances would appear acceptably sharp:

iPhone 13 Pro Max:

iPhone XS:

If the model is farther away, at 25m, you can see that for the iPhone 13 Pro Max, objects closer than about 9.5m would start to blur:

For the iPhone XS, objects wouldn't start to blur until they were closer than about 8.5m:
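A rough way to reproduce numbers like these is the standard hyperfocal-distance calculation. The sketch below is a minimal version under assumptions that are not from the post: both wide cameras are modeled as a 26 mm (35 mm-equivalent) lens with a 0.03 mm circle of confusion, and the real sensor sizes are ignored, so treat the output as illustrative only. `hyperfocal_mm` and `dof_limits_m` are hypothetical helper names.

```python
import math

# Assumptions (not from the original post): 35 mm-equivalent focal length
# and a conventional full-frame circle of confusion for both cameras.
FOCAL_MM = 26.0
COC_MM = 0.03


def hyperfocal_mm(f_number: float, focal_mm: float = FOCAL_MM, coc_mm: float = COC_MM) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, in millimetres."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_m(subject_m: float, f_number: float,
                 focal_mm: float = FOCAL_MM, coc_mm: float = COC_MM) -> tuple[float, float]:
    """Return the (near, far) limits of acceptable sharpness in metres."""
    s = subject_m * 1000.0  # work in millimetres
    h = hyperfocal_mm(f_number, focal_mm, coc_mm)
    near = s * (h - focal_mm) / (h + s - 2 * focal_mm)
    # At or beyond the hyperfocal distance, everything out to infinity is acceptably sharp.
    far = s * (h - focal_mm) / (h - s) if s < h else math.inf
    return near / 1000.0, far / 1000.0


if __name__ == "__main__":
    cameras = {"iPhone 13 Pro Max Wide": 1.5, "iPhone XS Wide": 1.8}
    for subject in (9.0, 25.0):
        for name, n in cameras.items():
            near, far = dof_limits_m(subject, n)
            far_txt = "infinity" if math.isinf(far) else f"{far:.1f} m"
            print(f"{name} @ {subject:g} m: sharp from {near:.1f} m to {far_txt}")
```

With these assumptions, a 25 m subject gives a near limit of about 9.4 m at ƒ/1.5 and about 8.3 m at ƒ/1.8, in the same ballpark as the figures above; at 9 m, the ƒ/1.8 zone of sharpness is deeper on both sides of the focus point.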

The bottom line is that all of these devices are photographic tools: whether it's an iPhone or a $3,000 professional camera with a $2,000 lens, for best results you need to work within the optical limits of the system you have in hand.